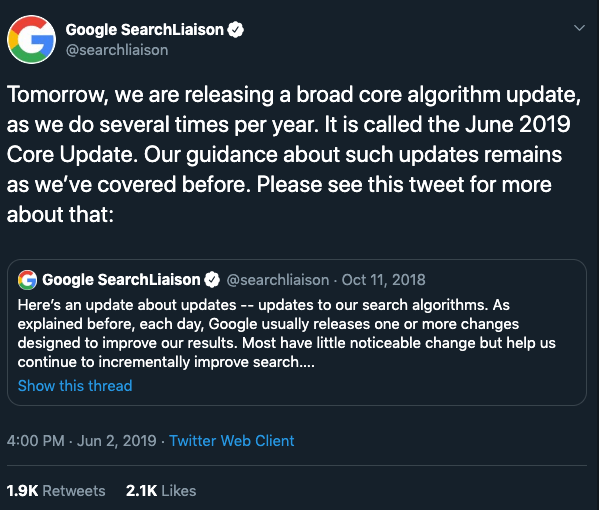

Google publicly announced another “broad core” algorithm update this week.

Not sure why that would get 2k likes. Every time Google “improves” its core algorithm, thousands of small online businesses have to downsize or fold. But I digress.

What “broad core” means

“Broad core” can mean that every site may be affected to some degree. More often (personal opinion here), it means the sites hit hardest share a site type or industry, and that the effect applies to the entire site’s pages rather than to a few individual ones.

What “broad core” means to you

If your site has been around for more than a year, you have likely been affected by a core update. Maybe not to a noticeable extent, or maybe you just ignore your Google traffic, but even if you are completely “white hat,” you will probably get dinged pretty good at some point unless you are a mega brand.

The financial costs

For those affected, the work over the following 6 to 12 months will probably cost a solopreneur or blogger hundreds of hours and tens of thousands of dollars, not just in lost revenue but in the time, energy, and consulting fees needed to figure out what happened and recover from it.

Of course, sometimes Google “overdoes it,” webmasters complain, and Google scales back the effect. This usually happens about two to three months later, when they “tune” the update, basically revising it to account for unintended effects or fallout.

The emotional costs of this kind of roller coaster are immeasurable, because, more often than not, another update arrives a few months after that, and around it goes.

How to know if you are (negatively) affected by a core update

You may not know it, but whenever your search traffic rises or falls significantly over a few days with no other clear reason and then “stabilizes,” it’s probably one of four things.
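If you export daily clicks (for example, from the Search Console Performance report), you can spot that “shift, then stabilize” pattern without eyeballing charts. The sketch below is a minimal, hypothetical helper: it compares the average of the 7 days before each date with the 7 days after and flags changes larger than 20%. The window and threshold are illustrative assumptions, not Google thresholds.

```python
from statistics import mean


def find_traffic_shifts(daily_clicks, window=7, threshold=0.2):
    """Hypothetical helper: return (day_index, pct_change) pairs where the
    average of the next `window` days differs from the previous `window`
    days by more than `threshold` (a fraction, so 0.2 means 20%)."""
    shifts = []
    for i in range(window, len(daily_clicks) - window + 1):
        before = mean(daily_clicks[i - window:i])
        after = mean(daily_clicks[i:i + window])
        if before == 0:
            continue  # no baseline traffic, so a percentage change is meaningless
        change = (after - before) / before
        if abs(change) > threshold:
            shifts.append((i, round(change, 3)))
    return shifts


# Two flat weeks at 100 clicks/day, then two flat weeks at 60 clicks/day.
clicks = [100] * 14 + [60] * 14
shifts = find_traffic_shifts(clicks)
# The day with the biggest swing is the likely date of the drop.
biggest = max(shifts, key=lambda s: abs(s[1]))
```

Several neighboring days get flagged around a real drop, so picking the largest swing (here, day 14) is a reasonable way to pin down the date to compare against update announcements.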

Check @searchliaison on Twitter for announcements around the time your traffic dropped

To confirm it’s not a manual action or what I call a “threshold” penalty, look for evidence of a Google update, either as announced by Google or as reflected in SERP volatility tools.

If it was a while ago, or you are looking at multiple dates, you can go to allmytweets.net, log in with your Twitter account, and search for “searchliaison.” After a minute or three, it will show you a scraped list of the most recent 3,000 tweets from @searchliaison.

Then you can search (CTRL + F / CMD + F) for keywords like “core” and “broad,” or for the dates your traffic dropped. You can also scroll and browse, because the list is in reverse chronological order.
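If you are checking several drop dates, the same keyword-plus-date search can be scripted. This is a minimal sketch that assumes you have copied the archive into a list of (date, text) pairs; that format, the 7-day window, and the sample tweet text are all hypothetical.

```python
from datetime import date, timedelta


def update_announcements(tweets, drop_date, days=7, keywords=("core", "broad")):
    """Hypothetical helper: return tweets posted within `days` of drop_date
    whose text mentions any of the keywords (case-insensitive)."""
    window = timedelta(days=days)
    return [
        (posted, text)
        for posted, text in tweets
        if abs(posted - drop_date) <= window
        and any(k in text.lower() for k in keywords)
    ]


# Placeholder tweets, not real @searchliaison posts.
tweets = [
    (date(2019, 6, 3), "Example: a broad core update is rolling out"),
    (date(2019, 5, 1), "Example: core update question"),
    (date(2019, 6, 4), "Example: unrelated announcement"),
]
matches = update_announcements(tweets, drop_date=date(2019, 6, 5))
```

Only the first tweet matches: the second mentions “core” but falls outside the window, and the third is in the window but has no keyword.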

Check SERP volatility tool

You can also quickly check what’s going on across the rest of the web with SERP (search engine results page) volatility tools.

One of the greatest contributions a rank-tracking SaaS offers the public is a “search sensor” or rankings volatility tool. Moz has MozCast. Rank Ranger has the Rank Risk Index, and so on.

Also, Barry Schwartz, a staple in search news, will often take screenshots of these tools after a core update and post them to Search Engine Land, saving you lots of clicking around.

The best SERP volatility tool, hands down

There are a dozen tools out there, some better than others. Our go-to is SEMrush Sensor, because it shows volatility for mobile vs. desktop, gives a snapshot by site type/industry for each, and lets you filter for changes in featured snippets and other SERP features that can affect your traffic, like knowledge boxes or more local pack results.

Rule out other factors

This stuff is complex. Just because your traffic change coincided with a Google update does not mean the update caused it. It could just be coincidence. Maybe someone pushed a website update, and the staging site’s robots config went live along with it (this happened to me this week). In that case, the drop would be unrelated to the update.
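A quick way to rule out the staging-robots mistake is to check whether your live robots.txt lets Googlebot crawl your pages. This sketch uses Python’s standard-library `urllib.robotparser` and parses text you paste in (so it runs offline); `example.com` is a placeholder. Python’s parser follows the classic robots.txt rules and does not implement every extension Google supports (such as `*` wildcards in paths), so treat it as a sanity check, not a replacement for Search Console.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt, url):
    """Return True if the given robots.txt text lets Googlebot fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse pasted text instead of fetching
    return parser.can_fetch("Googlebot", url)


# A typical staging config blocks everything, including your homepage.
staging = "User-agent: *\nDisallow: /"
# A typical production config only blocks an admin area.
production = "User-agent: *\nDisallow: /wp-admin/"

staging_ok = googlebot_allowed(staging, "https://example.com/")
production_ok = googlebot_allowed(production, "https://example.com/")
```

If the homepage comes back blocked on your live site, you have found a non-algorithmic reason for the drop.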

Or several factors can compound. If you have a manual action, a toxic link profile, or weak E-A-T (expertise, authoritativeness, trustworthiness) signals, you could be disproportionately affected by an update.

It really just depends on what signals the update is attempting to account for.

Check Google Search Console

Go to this page: https://search.google.com/search-console/manual-actions

If there are no issues detected, great. If there are, address them.

Check your link profile

The easiest and fastest way to do this is to check ahrefs.com, the premier link index. Start the seven-day free trial (credit card required), set a calendar reminder for day 6 so you remember to cancel if need be, then log in. Once logged in, go to this URL:

https://ahrefs.com/site-explorer/backlinks/v2/anchors/subdomains/historical/phrases/all/1/refdomains_dofollow_desc?target=yourdomain.com


Make sure to replace “yourdomain.com” with your own domain name.

Alternatively, once logged in, search for your domain on the ahrefs.com homepage and click “Anchors” on the left-hand side. If you see anything out of place, like 100 referring domains using a period (“.”) as the anchor text, or anything pharmaceutical, gambling, or ecommerce-product related that has nothing to do with your site, flag it. You now have to go through a link disavow process.
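If your anchor list is long, a small script can do the first pass of flagging. This is a minimal sketch that assumes you have copied the anchors report into (anchor text, referring domains) pairs; that format and the `SPAM_KEYWORDS` list are hypothetical, so adjust the keywords to whatever is off-topic for your niche. It only surfaces candidates; you still decide what goes into a disavow file.

```python
import string

# Hypothetical keyword list: terms that would be off-topic for most blogs.
SPAM_KEYWORDS = ("viagra", "casino", "poker", "replica", "beats by dre")


def flag_suspicious_anchors(rows, keywords=SPAM_KEYWORDS):
    """rows: iterable of (anchor_text, referring_domains) pairs.
    Returns the rows whose anchor is punctuation only (like ".")
    or contains one of the off-topic keywords."""
    flagged = []
    for anchor, ref_domains in rows:
        text = anchor.strip().lower()
        punctuation_only = text != "" and all(ch in string.punctuation for ch in text)
        off_topic = any(word in text for word in keywords)
        if punctuation_only or off_topic:
            flagged.append((anchor, ref_domains))
    return flagged


rows = [
    ("my blog name", 40),
    (".", 100),
    ("Beats By Dre Headphones Red", 12),
    ("click here", 5),
]
suspicious = flag_suspicious_anchors(rows)
```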

If you have weird backlinks, the next thing to check is your Google rankings for those terms. This will help determine if your site was hacked and unintended pages were maliciously created. Let’s say you find weird anchor text for “beats by dre headphones red” (this really happened to a client). Then go to Google and search:

site:yourdomain.com beats by dre

This will reveal any pages on your domain that Google has indexed for that phrase, which may be pages created by the hack.
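If you turned up several weird anchor phrases, you can generate one `site:` search link per phrase and open them in turn. This is a minimal sketch using Python’s standard-library `urllib.parse.urlencode`; `yourdomain.com` and the phrases are placeholders.

```python
from urllib.parse import urlencode


def site_search_url(domain, phrase):
    """Build a Google search URL for the query `site:<domain> <phrase>`."""
    query = f"site:{domain} {phrase}"
    # urlencode escapes ":" and turns spaces into "+" so the URL is valid.
    return "https://www.google.com/search?" + urlencode({"q": query})


phrases = ["beats by dre", "replica watches"]
links = [site_search_url("yourdomain.com", p) for p in phrases]
```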

If you find evidence of a hack, start a site recovery process.

What to do and not do longer term

Warren Buffett once said something annoying like, “If you know what you’re doing, you don’t need to diversify.” It used to be that if you got a Google slap, it was your fault. You were doing something egregious, and it was hurting user experience.

But more and more, these algorithm updates are less about improving user experience by referring users to your site’s pages under the right conditions, as a search engine is supposed to do, and more about Google’s play to take over the internet: keeping users on Google’s results pages by scraping your website’s answers and showing them there.

Because we don’t have control over the monopoly, and we can only trust that the monopoly will always act in its own interest, we have to account for the resulting uncertainty.

You need to diversify your traffic sources. If you know you should have a Pinterest strategy, start chipping away at it a little each day. Ditto for LinkedIn.

And just know that a LOT of the most qualified Google traffic to your site is the result of word of mouth. That data is hard to see at a glance, but know that it is there. Word of mouth has always been the most powerful marketing, and it’s only becoming more important as we move toward the webpocalypse.

Don’t turn your site on its head

Don’t take a traffic drop like this as a sign you need to turn your site on its head. Google is not the be-all and end-all of your life and business.

If you start hacking at your site without carefully considering whether the changes support your core mission, your business goals, and your users’ experience, then you’ve lost much more than traffic.