Loading the data you already have about your website into a graph database can quickly surface deep, actionable insights for improving your pages in search.

In this walkthrough, you’ll see one example line of discovery that takes us from a bunch of siloed data you already have, like:

- dozens to hundreds of pages and their word counts

- how they are currently interlinked

- related Google Analytics organic traffic data

- related Google Search Console data

To a clear set of instructions on:

- which pages to consolidate or keep

- which page the removed pages should redirect to

- which related pages should link to each kept page

Webpages on your site and how they are linked

I wrote a tutorial on loading Screaming Frog crawl data into Neo4j to visualize your site. I’m putting out a much more robust series of scripts to load that data on GitHub once I’m done working out some kinks around duplicate records.
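Until those scripts are out, here is a minimal Python sketch of one way to handle duplicate records before loading. It assumes an inlinks export with columns named `Source` and `Destination` (check the headers in your own export); the function name `load_link_edges` and the sample rows are hypothetical.

```python
import csv
import io


def load_link_edges(csv_text: str) -> list[tuple[str, str]]:
    """Return unique (source, destination) link pairs in first-seen order.

    Assumes hypothetical column headers "Source" and "Destination".
    """
    seen: set[tuple[str, str]] = set()
    edges: list[tuple[str, str]] = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        pair = (row["Source"].strip(), row["Destination"].strip())
        if pair not in seen:  # skip repeated records of the same link
            seen.add(pair)
            edges.append(pair)
    return edges


sample = """Source,Destination
https://example.com/a/,https://example.com/b/
https://example.com/a/,https://example.com/b/
https://example.com/b/,https://example.com/a/
"""
print(load_link_edges(sample))
```

Collapsing pairs means a page that links to the same destination twice counts once; keep every row instead if you want raw link counts.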

Once the data is loaded, a query returning the most linked-to pages shows that our homepage is linked to only about half as often as the other navigation items:

Visual inspection shows that our homepage is linked from the header logo, but not from the footer nav.

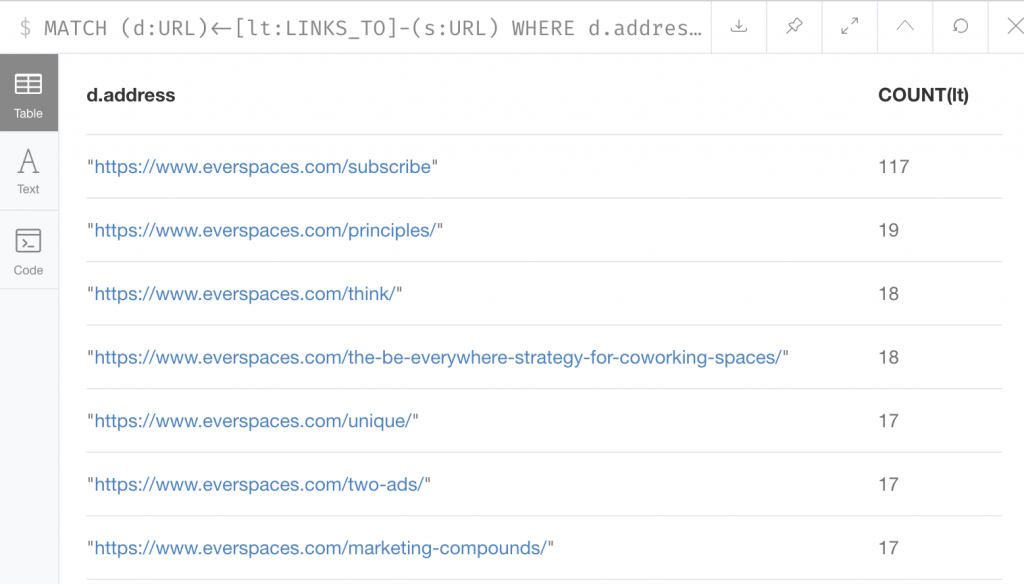
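That query isn't shown here; against the same `URL`/`LINKS_TO` model used in the queries below, it would look something like `MATCH (s:URL)-[lt:LINKS_TO]->(d:URL) RETURN d.address, count(lt) AS inlinks ORDER BY inlinks DESC`. The Python sketch below does the same counting over a plain list of hypothetical (source, destination) edges, which can be handy for sanity-checking results outside Neo4j.

```python
from collections import Counter


def most_linked(edges, top_n=10):
    """Count inbound links per destination URL and return the top_n most linked."""
    inlinks = Counter(dest for _source, dest in edges)
    return inlinks.most_common(top_n)


edges = [
    ("https://example.com/a/", "https://example.com/"),
    ("https://example.com/a/", "https://example.com/about/"),
    ("https://example.com/b/", "https://example.com/about/"),
    ("https://example.com/c/", "https://example.com/about/"),
]
print(most_linked(edges, top_n=2))
```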

Let’s adjust our search to exclude nav items and see which pages are linked to the most:

We have about 175 pages, but we link to the /subscribe page only 117 times.
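For scale, 117 links across roughly 175 pages means /subscribe is reached from at most about two-thirds of pages (117 / 175 ≈ 0.67). One way to exclude nav items without tagging them by hand is to treat destinations linked from nearly every page as navigation. The Python sketch below assumes that heuristic and a hypothetical 90% cutoff; it counts distinct linking pages rather than raw links.

```python
from collections import defaultdict


def split_sitewide_links(edges, threshold=0.9):
    """Separate near-sitewide link targets (likely navigation) from the rest.

    A destination linked from at least `threshold` of all source pages is
    treated as navigation. This is a heuristic, not a rule. Returns
    (nav_destinations, linking_page_counts_for_everything_else).
    """
    sources_by_dest = defaultdict(set)
    all_sources = set()
    for source, dest in edges:
        all_sources.add(source)
        sources_by_dest[dest].add(source)

    total = len(all_sources)  # > 0 whenever sources_by_dest is non-empty
    nav = {d for d, srcs in sources_by_dest.items() if len(srcs) / total >= threshold}
    others = {d: len(srcs) for d, srcs in sources_by_dest.items() if d not in nav}
    return nav, others


pages = [f"https://example.com/p{i}/" for i in range(4)]
edges = [(p, "https://example.com/contact/") for p in pages]
edges += [(pages[0], "https://example.com/subscribe/"), (pages[1], "https://example.com/subscribe/")]
nav, others = split_sitewide_links(edges)
print(nav)
print(others)
```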

We also see a couple of posts in that list. If we compare it with a list of the posts we would want to have the most inlinks, we have actionable data. Examples of posts that should have the most inlinks include:

- those generating the most landing page subscribers

- pages we want to nudge from positions 3–10 up to positions 1–3, identified in Google Search Console as pages that get lots of impressions in search results but have a weaker average position or few clicks

- key guides that already rank where we want them, so we can stabilize those rankings
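The “nudge” criterion in the list above can be sketched as a simple filter over Search Console rows. This is a minimal Python example assuming rows with hypothetical `page`, `impressions`, `clicks`, and `position` keys, plus an illustrative 1,000-impression floor; it keeps pages whose average position falls after 3 but no worse than 10.

```python
def nudge_candidates(rows, min_impressions=1000, best=3.0, worst=10.0):
    """Return rows with many impressions whose average position sits just
    outside the top results (best < position <= worst), most impressions first.
    """
    picked = [
        r for r in rows
        if r["impressions"] >= min_impressions and best < r["position"] <= worst
    ]
    return sorted(picked, key=lambda r: r["impressions"], reverse=True)


rows = [
    {"page": "/guide-a/", "impressions": 5000, "clicks": 40, "position": 6.2},
    {"page": "/guide-b/", "impressions": 8000, "clicks": 900, "position": 1.8},
    {"page": "/post-c/", "impressions": 300, "clicks": 2, "position": 7.5},
    {"page": "/post-d/", "impressions": 2500, "clicks": 30, "position": 9.1},
]
print([r["page"] for r in nudge_candidates(rows)])  # ['/guide-a/', '/post-d/']
```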

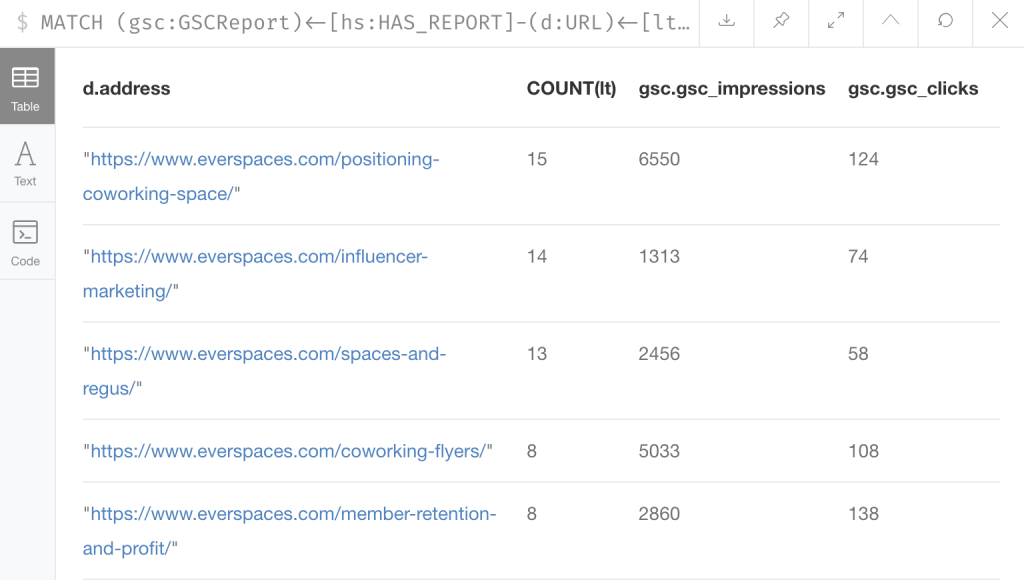

This query looks a bit complex, but it’s just looking up Google Search Console data for specific pages, along with the number of links pointing to those pages from other pages on the site.

```cypher
// Same-site HTML pages with plenty of impressions but few clicks,
// ranked by how many same-site HTML pages link to them
MATCH (gsc:GSCReport)<-[:HAS_REPORT]-(d:URL)<-[lt:LINKS_TO]-(s:URL)
WHERE gsc.gsc_impressions > 1000
  AND gsc.gsc_clicks < 150
  AND d.address CONTAINS '//www.everspaces.com'
  AND d.content CONTAINS 'html'
  AND s.address CONTAINS '//www.everspaces.com'
  AND s.content CONTAINS 'html'
RETURN d.address AS page,
       count(lt) AS inlinks,
       gsc.gsc_impressions AS impressions,
       gsc.gsc_clicks AS clicks
ORDER BY inlinks DESC
LIMIT 10
```

The `WHERE` clause limits results to pages with more than 1,000 impressions in Google search results but fewer than 150 clicks, and `ORDER BY` sorts them by their count of inbound links:

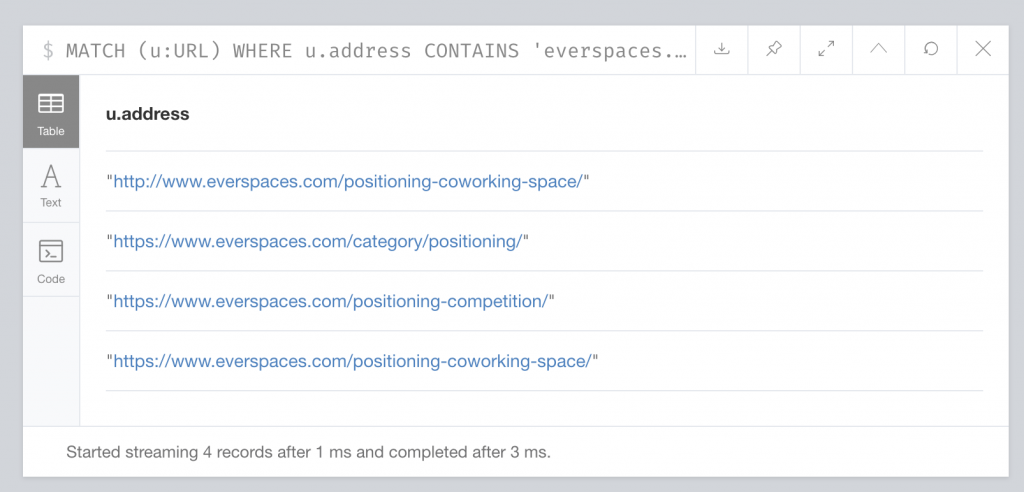
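To see the logic of that query without the database, here is a Python sketch that joins hypothetical Search Console figures with inbound link counts and applies the same thresholds. It also computes click-through rate, which makes ratios like the one discussed next easy to spot. Unlike the Cypher, it doesn't filter by domain or content type, so it assumes the edges are already limited to same-site HTML pages.

```python
from collections import Counter


def underperforming_pages(edges, gsc, min_impressions=1000, max_clicks=150, limit=10):
    """Rank high-impression, low-click pages by their inbound link count.

    `gsc` maps page -> (impressions, clicks). Like the MATCH in the Cypher
    query, pages with no inbound links are left out.
    """
    inlinks = Counter(dest for _source, dest in edges)
    rows = []
    for page, (impressions, clicks) in gsc.items():
        if impressions > min_impressions and clicks < max_clicks and inlinks[page] > 0:
            ctr = clicks / impressions  # click-through rate
            rows.append((page, inlinks[page], impressions, clicks, round(ctr, 4)))
    rows.sort(key=lambda row: row[1], reverse=True)  # most inbound links first
    return rows[:limit]


edges = [("/a/", "/positioning/"), ("/b/", "/positioning/"),
         ("/c/", "/positioning/"), ("/a/", "/pricing-guide/")]
gsc = {
    "/positioning/": (12000, 60),
    "/pricing-guide/": (3000, 120),
    "/popular/": (20000, 4000),
    "/orphan/": (5000, 10),
}
for row in underperforming_pages(edges, gsc):
    print(row)
```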

The positioning post is interesting: it has a super high ratio of impressions to clicks! Let’s drill down into this one by filtering addresses for “positioning”.
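Outside the database, that filter is just a substring match on URL paths. Here is a small Python sketch that also separates category archive pages from everything else, assuming WordPress-style `/category/` paths like the category URL queried below; the function name and example.com addresses are hypothetical.

```python
from urllib.parse import urlparse


def find_by_term(addresses, term):
    """Return addresses whose path contains `term` (case-insensitive),
    split into category archive pages and everything else."""
    term = term.lower()
    categories, pages = [], []
    for address in addresses:
        path = urlparse(address).path.lower()
        if term not in path:
            continue
        (categories if path.startswith("/category/") else pages).append(address)
    return categories, pages


addresses = [
    "https://example.com/category/positioning/",
    "https://example.com/positioning-guide/",
    "https://example.com/pricing/",
]
print(find_by_term(addresses, "Positioning"))
```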

It looks like we have a category called positioning. Let’s see what it links to:

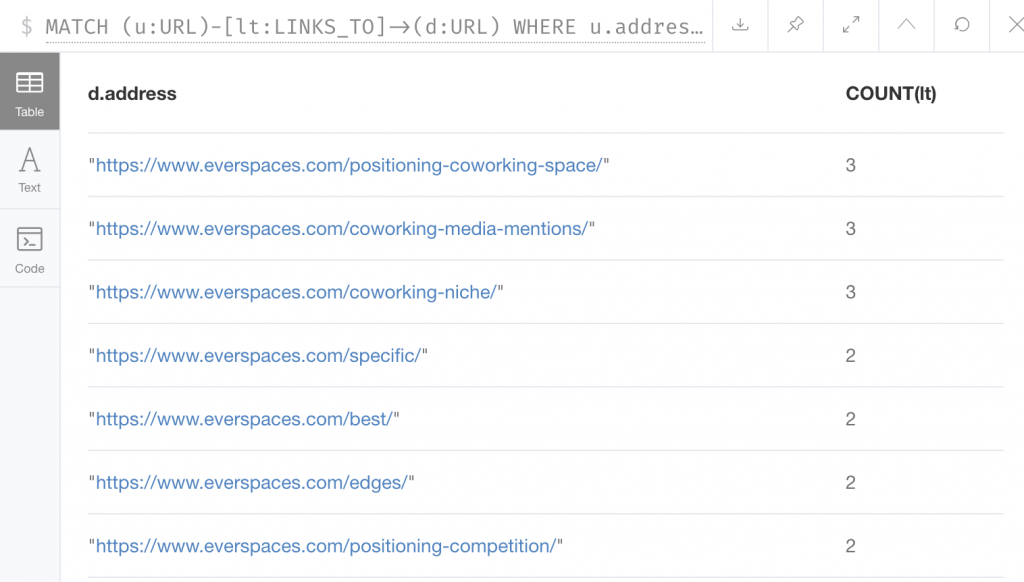

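```cypher
// HTML pages linked from the positioning category page, with link counts
MATCH (u:URL)-[lt:LINKS_TO]->(d:URL)
WHERE u.address = 'https://www.everspaces.com/category/positioning/'
  AND u.content CONTAINS 'html'
  AND d.content CONTAINS 'html'
// count() already groups by d.address, so DISTINCT isn't needed
RETURN d.address AS page, count(lt) AS links
ORDER BY links DESC
```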

At this point, we could simply make sure that the rest of the pages in that category link to the page we’re trying to rank for “coworking positioning”-related queries, or we could be a bit more strategic about it.
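The simple option amounts to a gap check: which pages linked from the category don't yet link to the target post? A Python sketch over hypothetical edges follows. In practice you would drop nav or sitewide destinations from the edges first, since a category page links to those too.

```python
def missing_links(edges, category_url, target_url):
    """Return pages linked from `category_url` that don't yet link to `target_url`."""
    edge_set = set(edges)
    category_posts = sorted({d for s, d in edges if s == category_url and d != target_url})
    return [p for p in category_posts if (p, target_url) not in edge_set]


edges = [
    ("/category/positioning/", "/positioning-guide/"),
    ("/category/positioning/", "/post-a/"),
    ("/category/positioning/", "/post-b/"),
    ("/post-a/", "/positioning-guide/"),
]
print(missing_links(edges, "/category/positioning/", "/positioning-guide/"))  # ['/post-b/']
```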

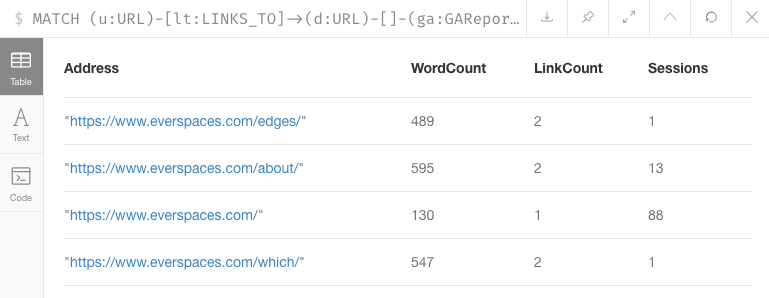

This shows which posts linked from the “positioning” category have low word counts and few sessions from search:

We can see that the /edges/ and /which/ pages are candidates for consolidating into our main positioning post, while our other related posts are better candidates for linking to our main post.
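That consolidate-or-link call can be written down as a rule of thumb. The Python sketch below assumes each post has a word count and an organic session count; the 600-word and 20-session cutoffs and the URLs are hypothetical and should be tuned to your own site.

```python
def plan_actions(posts, max_words=600, max_sessions=20):
    """Split posts into consolidation candidates (thin and little search traffic)
    and posts that should instead link to the main post.

    `posts` maps URL -> (word_count, organic_sessions); cutoffs are hypothetical.
    """
    consolidate, link = [], []
    for url, (words, sessions) in sorted(posts.items()):
        if words < max_words and sessions < max_sessions:
            consolidate.append(url)
        else:
            link.append(url)
    return consolidate, link


posts = {
    "/post-a/": (350, 4),
    "/post-b/": (420, 9),
    "/post-c/": (1800, 15),
    "/post-d/": (500, 140),
}
print(plan_actions(posts))  # (['/post-a/', '/post-b/'], ['/post-c/', '/post-d/'])
```

Requiring both signals to be low keeps a short post that still earns search traffic (like `/post-d/` here) out of the consolidation pile.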

We might also want to turn the list of “positioning” posts in our positioning category into a curated link list on our main positioning page, and then redirect the positioning category page (and any paginated category pages) to our new, more robust positioning page.
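A redirect plan like that is easy to generate as a map from old paths to the main post. This Python sketch assumes WordPress-style pagination (`/category/<name>/page/<n>/`) and a known number of category pages; the target URL and paths are hypothetical.

```python
def build_redirects(target_url, consolidated_paths, category_path, category_pages=1):
    """Map each old path to the main post's URL for 301 redirects.

    Assumes WordPress-style pagination (<category_path>page/<n>/);
    adjust the pattern for your CMS.
    """
    redirects = {path: target_url for path in consolidated_paths}
    redirects[category_path] = target_url
    base = category_path.rstrip("/")
    for n in range(2, category_pages + 1):  # page 1 is the category URL itself
        redirects[f"{base}/page/{n}/"] = target_url
    return redirects


plan = build_redirects(
    "https://example.com/positioning-guide/",
    ["/post-a/", "/post-b/"],
    "/category/positioning/",
    category_pages=3,
)
for old, new in plan.items():
    print(f"{old} -> {new}")
```

If you paste these into server config, use exact-match rules: some prefix-matching directives (Apache’s `Redirect`, for example) append the rest of the requested path to the target.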