Well. It’s 11:10pm and I’m digging through untitled drafts looking for the seed of something I can whip off to keep my somewhat daily email streak going.

Right now I’ve been learning/writing about a few things but they don’t feel punchy enough for an email:

A library of scripts for the machine learning things I’ve been playing with and proofs of concept

I published the first case, but not sure if I’m going to keep it on the site as the pilot proof of concept we did wasn’t ultimately picked up for a bigger implementation by the client.

Content organization as a value engine for your existing content

This one I have been publishing about. But I’ve been working on a sort of opinionated framework for how sites should be structured and I’m having trouble not just caveating every decision.

There really isn’t a right answer, there’s just progress or hurting yourself by throwing more content on the pile.

Thoughts on digital privacy after Avast announced they’re shutting Jumpshot down.

The short version of which is:

1) People will start to understand how little privacy they have the more data we collect, combine and enrich about them. And outrage will ensue. I’m very interested in writing more and speculating about this but not sure how relevant that is to you, and

2) Privacy will become increasingly important to consumers, and you really want to be on the right side of trusting relationships by getting express consent and finding a balance: having enough data to effectively send the right users to the right parts of your content-product matrix.

The technical history of Google’s search patents and trying to understand how I might be able to apply the concepts on a much smaller dimension, not necessarily just for SEO purposes.

This part has been a weird trip. I basically put down SEO things and web design things because I wanted to go more highbrow with “content strategy,” which seemed like a nice Venn diagram overlap between commoditized developer and generalist SEO.

But trying to learn how to solve content problems algorithmically has brought me full circle to the same content search problems Google has been solving.

It’s fascinating and deflating.

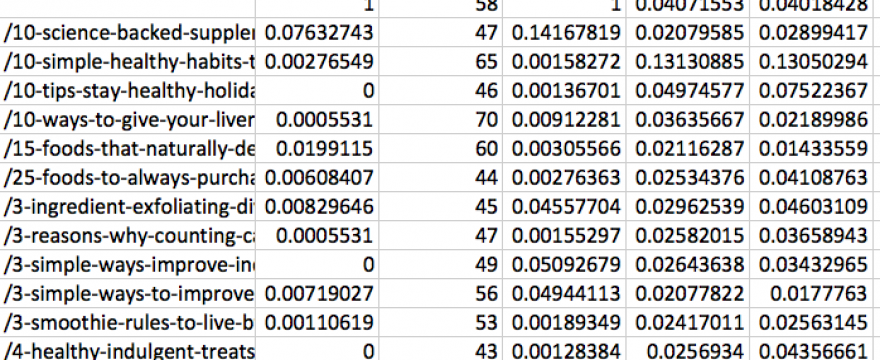

For example, I’m developing a workflow for internal linking analysis. I can run a simple PageRank / CheiRank script to score a site’s pages, flag ones that may be “leaking” PageRank, and surface pages worth focusing on for better internal linking.

I’m developing some thinking around how this could be used. Like, if there is weak or no correlation between PageRank and CheiRank on a site’s pages, is that a good indicator of poor internal linking health?
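Here is a minimal sketch of that kind of check, not the actual script. It assumes the internal links are already crawled into a plain dict, uses a textbook power-iteration PageRank with the common 0.85 damping factor, treats CheiRank as PageRank on the reversed link graph, and compares the two with a Pearson correlation. The `links` graph and all function names are made up for illustration.

```python
from math import sqrt


def pagerank(links, damping=0.85, iterations=100):
    """Power-iteration PageRank over a dict of page -> list of linked pages."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Pages with no outlinks spread their score evenly over every page.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


def cheirank(links, **kwargs):
    """CheiRank: PageRank computed on the graph with every link reversed."""
    reversed_links = {}
    for source, targets in links.items():
        reversed_links.setdefault(source, [])
        for target in targets:
            reversed_links.setdefault(target, []).append(source)
    return pagerank(reversed_links, **kwargs)


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either series has no variance."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # correlation is undefined here; 0.0 is a convention of this sketch
    return cov / sqrt(var_x * var_y)


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/blog", "/services", "/about"],
    "/blog": ["/", "/blog/post-a", "/blog/post-b"],
    "/blog/post-a": ["/services"],
    "/blog/post-b": ["/blog/post-a"],
    "/services": ["/"],
    "/about": [],
}

pr = pagerank(links)
cr = cheirank(links)
pages = sorted(pr)
r = pearson([pr[p] for p in pages], [cr[p] for p in pages])

for p in pages:
    print(f"{p:15s} PageRank={pr[p]:.3f} CheiRank={cr[p]:.3f}")
print(f"Pearson correlation: {r:.3f}")
```

A page with high PageRank and low CheiRank receives a lot of link value but passes little on, which is one way to read "leaking." Whether a weak correlation across the whole site actually signals poor linking health is still the open question; the sketch only produces the number.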

Originally I thought this was really neat. Like I was super pumped about all the graph algorithm things I might be able to do.

After digging through posts and language around Google patents though, I’m better understanding how much more effectively they are calculating these things now.

It’s been neat getting a deeper sense of the problems Google has been trying to solve. But I can see that the calculation above, as-is, is too simple to mean much. I really need a more accurate, topic-specific way to measure each link’s value and position on pages.

Or just have it be another quick datapoint during audits, or a before-and-after check for site changes.