A lot of data preprocessing is trial and error. It's writing a regular expression, or relying on a method from a library I don't fully understand, and then making sure it does more good than harm.

In that process, I realized that instead of running and checking scripts against a 7MB CSV file, we should start with just a couple of rows. So I created a script that copies the first few rows of our working CSV into a smaller sample file for quick use.
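A related trick, sketched here as an assumption rather than the script described below: pandas' `read_csv` accepts an `nrows` argument that stops reading after that many data rows, so a large export never has to be loaded in full. The function name `read_first_rows` and the small in-memory CSV are hypothetical stand-ins; for the export in this post you would pass the real file path and `header=1`.

```python
import io

import pandas


def read_first_rows(source, n_rows=5, header=0):
    """Return only the first n_rows data rows of a CSV.

    nrows makes read_csv stop after that many rows (the header line is
    not counted), so the rest of a large file is never parsed.
    """
    return pandas.read_csv(source, header=header, nrows=n_rows)


# Demo: an in-memory CSV standing in for the real export (hypothetical data).
demo_csv = io.StringIO(
    "url,status\n"
    "https://example.com/,200\n"
    "https://example.com/about/,200\n"
    "https://example.com/old/,301\n"
)
sample = read_first_rows(demo_csv, n_rows=2)
print(sample.shape)  # (2, 2)
```

This only covers rows at the top of the file, which is also what the script below keeps.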

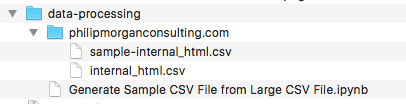

Here it's called "Generate Sample CSV File from Large CSV File.ipynb", and the contents are pretty short:

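```python
import pandas
file_path = 'philipmorganconsulting.com'  # domain name, which is also the folder name
file_name = 'internal_html.csv'
rel_file = file_path + '/' + file_name
# header=1: skip the file's first line and use the second line as column names
df = pandas.read_csv(rel_file, header=1, encoding='utf-8')
df.shape  # check row and column counts
# drop the last 848 rows so only the first rows remain
df.drop(df.tail(848).index, inplace=True)
df.shape  # check the counts again to confirm it worked
# index=False keeps pandas from writing its row index as an extra first column
df.to_csv(file_path + '/sample-' + file_name, index=False)
```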

As long as your naming and folder structure are the same, you would just:

- replace the domain name assigned to `file_path` on line 2 of the script; since it doubles as the folder name, your folder needs the same name.

- run the first `df.shape` to get the number of rows and columns.

- replace 848 with the number of rows you want removed from the end of the file. If `df.shape` shows `(10000, 10)`, you have 10,000 rows and 10 columns. If you only want 1,000 rows in your sample, change `df.drop(df.tail(848).index, inplace=True)` to `df.drop(df.tail(9000).index, inplace=True)`.

- run the rest of the cells, which writes those 1,000 rows to a new CSV called `sample-internal_html.csv` in the same folder.
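Rather than working out the drop count by hand, it can be computed from the row count: rows to drop = total rows - rows to keep. A minimal sketch, assuming the default unique row index that `read_csv` produces; `drop_to_first_rows` and the 10,000-row, 10-column demo frame are hypothetical.

```python
import pandas


def drop_to_first_rows(df, keep):
    """Drop rows off the end of df in place so only the first `keep` rows remain."""
    # Clamp at zero: df.tail() with a negative n returns everything except
    # the first rows, which would drop far more than intended.
    rows_to_drop = max(len(df) - keep, 0)
    df.drop(df.tail(rows_to_drop).index, inplace=True)
    return rows_to_drop


# Demo: a 10,000-row, 10-column frame standing in for the real export.
df = pandas.DataFrame({f"col{i}": list(range(10_000)) for i in range(10)})
print(df.shape)  # (10000, 10)
dropped = drop_to_first_rows(df, keep=1000)
print(dropped, df.shape)  # 9000 (1000, 10)
```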

As I write this, I'm realizing v2 will need a more elegant approach: maybe refactor it to drop a percentage of the rows based on a simple percentage input, or to keep a fixed x rows, since files might be unwieldy.
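One possible shape for that v2, offered as a sketch rather than a finished design: a hypothetical `make_sample` helper that keeps either a fixed number of rows or a fraction of them. Dropping p percent of the rows is the same as keeping 1 - p of them, so one keep-fraction input covers the percentage idea. It uses `head()`, which returns the first n rows directly instead of dropping from the end.

```python
import pandas


def make_sample(df, keep_rows=None, keep_fraction=None):
    """Return a copy of the first rows of df, by fixed count or by fraction.

    Hypothetical v2 helper: pass exactly one of keep_rows or
    keep_fraction (0 < keep_fraction <= 1).
    """
    if (keep_rows is None) == (keep_fraction is None):
        raise ValueError("pass exactly one of keep_rows or keep_fraction")
    if keep_fraction is not None:
        if not 0 < keep_fraction <= 1:
            raise ValueError("keep_fraction must be in (0, 1]")
        # Round to the nearest whole row; max(1, ...) keeps at least one row.
        keep_rows = max(1, round(len(df) * keep_fraction))
    return df.head(keep_rows).copy()


df = pandas.DataFrame({"url": [f"https://example.com/{i}" for i in range(10_000)]})
print(make_sample(df, keep_rows=1000).shape)      # (1000, 1)
print(make_sample(df, keep_fraction=0.05).shape)  # (500, 1)
```

Rounding rather than `math.ceil` avoids floating-point surprises: `0.07 * 100` evaluates to `7.000000000000001`, which `ceil` would turn into 8.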

But for my purposes right now, this works.